Caffe代码中自带一些模型的例子,这些例子在源代码的models目录下,这些都是其他项目中用来训练的配置文件,学习的时候,我们没有必要完全自己从头到尾搭建自己的网络模型,而是直接使用例子中的模型,后期在这些模型上简单调整一下,一般可以满足大多数的需求。

下面我们以models/bvlc_alexnet目录下的模型配置文件为例子,训练我们自己的神经网络。

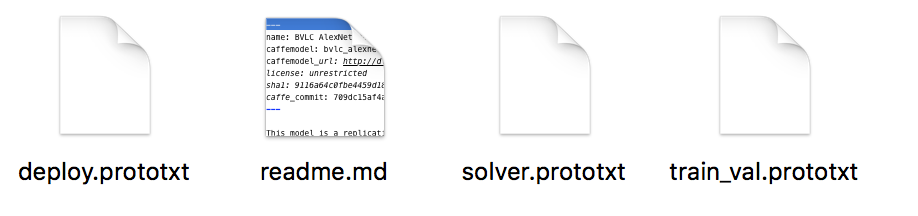

这个目录下有四个文件,如下图:

简单介绍一下这个几个文件:

train_val.prototxt用来定义训练神经网络时候的模型信息,主要定义训练时候的神经网络应该如何构造,比如分多少层,每层的行为是什么。

solver.prototxt用来定义神经网络运行的参数,比如使用CPU还是GPU,神经网络的配置文件(train_val.prototxt)的位置,以及文件名。

deploy.prototxt用来定义使用神经网络识别图片的时候使用的网络定义文件,一般是train_val.prototxt简单修改后得到的。

介绍完成神经网络模型,我们接下来需要提供我们的训练图片,用来让神经网络根据我们指定的图片进行学习。

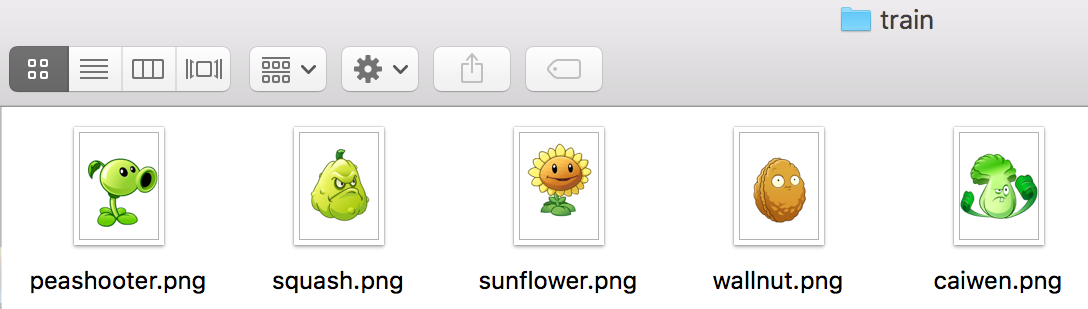

我们以iPad上的植物大战僵尸的资源图片为例子,让Caffe帮我们识别哪些是僵尸,哪些是植物。完整的例子可以从这里下载。

简单讲一下图片目录,其中的train目录下的图片为训练的图片,而detect目录为用来测试识别的图片。

本次,我们在新建的examples/PlantsVsZombies这个目录下进行操作。操作之前请参照macOS Sierra (10.12.3)编译Caffe保证可以成功编译Caffe。

注意,由于我们使用现有的神经网络模型来训练数据,因此,我们只需要提供训练图片集合就可以了,不需要提供验证图片集合,这个集合是用来调教神经网络配置文件的,我们已经有了配置好的文件,就没必要再重新调教神经网络配置了。

1.生成训练图片的索引文件train.txt,里面的内容如下:

peashooter.png 0 squash.png 0 sunflower.png 0 wallnut.png 0 caiwen.png 0 Gargantuan.png 1 Truckman.png 1 Yeti.png 1 MetalPail.png 1

注意后面的索引编号对应我们下面的标签文件的索引序号。

2.生成标签文件labels.txt,用来描述图片的内容:

0 Plant 1 Zombie

3.整合图片以及描述信息为lmdb格式,方便Caffe进行高性能的IO操作:

在examples/PlantsVsZombies目录下创建create_images.sh脚本,脚本内容如下:

#!/usr/bin/env sh

# Create the imagenet lmdb inputs

# N.B. set the path to the imagenet train + val data dirs

EXAMPLE=examples/PlantsVsZombies

TOOLS=build/tools

TRAIN_DATA_ROOT=examples/PlantsVsZombies/images/train/ #训练样本的存放路径

# Set RESIZE=true to resize the images to 256x256. Leave as false if images have

# already been resized using another tool.

RESIZE=true

if $RESIZE; then

RESIZE_HEIGHT=256 #改变图片的大小为256*256

RESIZE_WIDTH=256

else

RESIZE_HEIGHT=0

RESIZE_WIDTH=0

fi

# 判断路径是否正确的提示信息

if [ ! -d "$TRAIN_DATA_ROOT" ]; then

echo "Error: TRAIN_DATA_ROOT is not a path to a directory: $TRAIN_DATA_ROOT"

echo "Set the TRAIN_DATA_ROOT variable in create_imagenet.sh to the path" \

"where the images training data is stored."

exit 1

fi

rm -rf $EXAMPLE/train_lmdb

echo "Creating train lmdb..."

##调用convert_imageset文件转换文件格式,后面为输入参数

GLOG_logtostderr=1 $TOOLS/convert_imageset \

--resize_height=$RESIZE_HEIGHT \

--resize_width=$RESIZE_WIDTH \

$TRAIN_DATA_ROOT \

$EXAMPLE/train.txt \

$EXAMPLE/train_lmdb

echo "Done."

在代码的根目录下执行如下脚本:

$ sh examples/PlantsVsZombies/create_images.sh

4.生成图片均值文件,提高训练效率

在examples/PlantsVsZombies目录下创建make_mean.sh脚本,脚本内容如下:

#!/usr/bin/env sh EXAMPLE=examples/PlantsVsZombies DATA=examples/PlantsVsZombies TOOLS=build/tools $TOOLS/compute_image_mean $EXAMPLE/train_lmdb $DATA/mean.binaryproto echo "Done."

在代码的根目录下执行如下脚本:

$ sh examples/PlantsVsZombies/make_mean.sh

5.拷贝代码中的models/bvlc_alexnet中的文件到我们自己的工程目录中,并进行修改

$ mkdir examples/PlantsVsZombies/model/ $ cp -r models/bvlc_alexnet/* examples/PlantsVsZombies/model/

修改配置信息

$ vim examples/PlantsVsZombies/model/solver.prototxt

原始内容为:

net: "models/bvlc_alexnet/train_val.prototxt" test_iter: 1000 test_interval: 1000 base_lr: 0.01 lr_policy: "step" gamma: 0.1 stepsize: 100000 display: 20 max_iter: 450000 momentum: 0.9 weight_decay: 0.0005 snapshot: 10000 snapshot_prefix: "models/bvlc_alexnet/caffe_alexnet_train" solver_mode: GPU

修改为:

# 训练的prototxt在哪,路径 net: "examples/PlantsVsZombies/model/train_val.prototxt" # 测试要迭代多少个Batch test_iter * batchsize(测试集的)= 测试集的大小 #这个参数决定了整个的训练时间长度,如果设置为2,则在2分钟就可以,如果设置为1000,则需要28分钟以上,一般这个数字设置成分类的数量即可了,这个数字对后续的识别效果影响根据数据集的不同而有差别,如果识别率偏低,可以试试改大这个数字 test_iter: 2 # 每500次迭代,就在用测试集进行测试 test_interval: 500 # 设置初始化的学习率为0.01 base_lr: 0.01 # 权重衰减策略 lr_policy: "step" # 初始的学习率为0.01,并且每100000次迭代中进行学习率下降 gamma: 0.1 stepsize: 100000 # 每20次epoch就显示出一些数据信息 display: 20 # 迭代次数,我们数据集非常小,太高没意义,这个参数决定了整个的执行时间 5已经比较大了,后续图片增多后再调整大这个数字 max_iter: 5 # 一直都是0.9,固定不变;迭代的数据更快,步伐更快 momentum: 0.9 # 权重衰减因子为0.0005 weight_decay: 0.0005 # 每10000次迭代中,就生成当前状态的快照 snapshot: 10000 # 模型快照保存 snapshot_prefix: "examples/PlantsVsZombies/model/caffe_alexnet_train" # 可以设定GPU还是CPU solver_mode: CPU

继续调整

$ vim examples/PlantsVsZombies/model/train_val.prototxt

原始内容为:

name: "AlexNet"

layer {

name: "data"

type: "Data"

top: "data"

top: "label"

include {

phase: TRAIN

}

transform_param {

mirror: true

crop_size: 227

mean_file: "data/ilsvrc12/imagenet_mean.binaryproto"

}

data_param {

source: "examples/imagenet/ilsvrc12_train_lmdb"

batch_size: 256

backend: LMDB

}

}

layer {

name: "data"

type: "Data"

top: "data"

top: "label"

include {

phase: TEST

}

transform_param {

mirror: false

crop_size: 227

mean_file: "data/ilsvrc12/imagenet_mean.binaryproto"

}

data_param {

source: "examples/imagenet/ilsvrc12_val_lmdb"

batch_size: 50

backend: LMDB

}

}

layer {

name: "conv1"

type: "Convolution"

bottom: "data"

top: "conv1"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 96

kernel_size: 11

stride: 4

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu1"

type: "ReLU"

bottom: "conv1"

top: "conv1"

}

layer {

name: "norm1"

type: "LRN"

bottom: "conv1"

top: "norm1"

lrn_param {

local_size: 5

alpha: 0.0001

beta: 0.75

}

}

layer {

name: "pool1"

type: "Pooling"

bottom: "norm1"

top: "pool1"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "conv2"

type: "Convolution"

bottom: "pool1"

top: "conv2"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 2

kernel_size: 5

group: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu2"

type: "ReLU"

bottom: "conv2"

top: "conv2"

}

layer {

name: "norm2"

type: "LRN"

bottom: "conv2"

top: "norm2"

lrn_param {

local_size: 5

alpha: 0.0001

beta: 0.75

}

}

layer {

name: "pool2"

type: "Pooling"

bottom: "norm2"

top: "pool2"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "conv3"

type: "Convolution"

bottom: "pool2"

top: "conv3"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 384

pad: 1

kernel_size: 3

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu3"

type: "ReLU"

bottom: "conv3"

top: "conv3"

}

layer {

name: "conv4"

type: "Convolution"

bottom: "conv3"

top: "conv4"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 384

pad: 1

kernel_size: 3

group: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu4"

type: "ReLU"

bottom: "conv4"

top: "conv4"

}

layer {

name: "conv5"

type: "Convolution"

bottom: "conv4"

top: "conv5"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 1

kernel_size: 3

group: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu5"

type: "ReLU"

bottom: "conv5"

top: "conv5"

}

layer {

name: "pool5"

type: "Pooling"

bottom: "conv5"

top: "pool5"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "fc6"

type: "InnerProduct"

bottom: "pool5"

top: "fc6"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 4096

weight_filler {

type: "gaussian"

std: 0.005

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu6"

type: "ReLU"

bottom: "fc6"

top: "fc6"

}

layer {

name: "drop6"

type: "Dropout"

bottom: "fc6"

top: "fc6"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc7"

type: "InnerProduct"

bottom: "fc6"

top: "fc7"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 4096

weight_filler {

type: "gaussian"

std: 0.005

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu7"

type: "ReLU"

bottom: "fc7"

top: "fc7"

}

layer {

name: "drop7"

type: "Dropout"

bottom: "fc7"

top: "fc7"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc8"

type: "InnerProduct"

bottom: "fc7"

top: "fc8"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 1000

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "accuracy"

type: "Accuracy"

bottom: "fc8"

bottom: "label"

top: "accuracy"

include {

phase: TEST

}

}

layer {

name: "loss"

type: "SoftmaxWithLoss"

bottom: "fc8"

bottom: "label"

top: "loss"

}

修改后为:

name: "AlexNet"

layer {

name: "data"

type: "Data"

top: "data"

top: "label"

include {

phase: TRAIN

}

transform_param {

mirror: true

crop_size: 227

mean_file: "examples/PlantsVsZombies/mean.binaryproto"

}

data_param {

source: "examples/PlantsVsZombies/train_lmdb"

batch_size: 256

backend: LMDB

}

}

layer {

name: "data"

type: "Data"

top: "data"

top: "label"

include {

phase: TEST

}

transform_param {

mirror: false

crop_size: 227

mean_file: "examples/PlantsVsZombies/mean.binaryproto"

}

data_param {

source: "examples/PlantsVsZombies/train_lmdb"

batch_size: 50

backend: LMDB

}

}

layer {

name: "conv1"

type: "Convolution"

bottom: "data"

top: "conv1"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 96

kernel_size: 11

stride: 4

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu1"

type: "ReLU"

bottom: "conv1"

top: "conv1"

}

layer {

name: "norm1"

type: "LRN"

bottom: "conv1"

top: "norm1"

lrn_param {

local_size: 5

alpha: 0.0001

beta: 0.75

}

}

layer {

name: "pool1"

type: "Pooling"

bottom: "norm1"

top: "pool1"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "conv2"

type: "Convolution"

bottom: "pool1"

top: "conv2"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 2

kernel_size: 5

group: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu2"

type: "ReLU"

bottom: "conv2"

top: "conv2"

}

layer {

name: "norm2"

type: "LRN"

bottom: "conv2"

top: "norm2"

lrn_param {

local_size: 5

alpha: 0.0001

beta: 0.75

}

}

layer {

name: "pool2"

type: "Pooling"

bottom: "norm2"

top: "pool2"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "conv3"

type: "Convolution"

bottom: "pool2"

top: "conv3"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 384

pad: 1

kernel_size: 3

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu3"

type: "ReLU"

bottom: "conv3"

top: "conv3"

}

layer {

name: "conv4"

type: "Convolution"

bottom: "conv3"

top: "conv4"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 384

pad: 1

kernel_size: 3

group: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu4"

type: "ReLU"

bottom: "conv4"

top: "conv4"

}

layer {

name: "conv5"

type: "Convolution"

bottom: "conv4"

top: "conv5"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 1

kernel_size: 3

group: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu5"

type: "ReLU"

bottom: "conv5"

top: "conv5"

}

layer {

name: "pool5"

type: "Pooling"

bottom: "conv5"

top: "pool5"

pooling_param {

pool: MAX

kernel_size: 3

stride: 2

}

}

layer {

name: "fc6"

type: "InnerProduct"

bottom: "pool5"

top: "fc6"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 4096

weight_filler {

type: "gaussian"

std: 0.005

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu6"

type: "ReLU"

bottom: "fc6"

top: "fc6"

}

layer {

name: "drop6"

type: "Dropout"

bottom: "fc6"

top: "fc6"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc7"

type: "InnerProduct"

bottom: "fc6"

top: "fc7"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 4096

weight_filler {

type: "gaussian"

std: 0.005

}

bias_filler {

type: "constant"

value: 0.1

}

}

}

layer {

name: "relu7"

type: "ReLU"

bottom: "fc7"

top: "fc7"

}

layer {

name: "drop7"

type: "Dropout"

bottom: "fc7"

top: "fc7"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc8"

type: "InnerProduct"

bottom: "fc7"

top: "fc8"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "accuracy"

type: "Accuracy"

bottom: "fc8"

bottom: "label"

top: "accuracy"

include {

phase: TEST

}

}

layer {

name: "loss"

type: "SoftmaxWithLoss"

bottom: "fc8"

bottom: "label"

top: "loss"

}

本质上就是调整均值文件,数据库的路径。另外就是

inner_product_param {

num_output: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

这部分是分类的数量,我们目前只有两个分类,因此,把num_output:1000(ImageNet是1000个分类)调整为num_output:2。

上面的参数,同样需要调整deploy.prototxt里面的num_output里面的参数。

6.训练神经网络

在examples/PlantsVsZombies目录下创建train_alexnet.sh脚本,脚本内容如下:

#!/usr/bin/env sh ./build/tools/caffe train -solver examples/PlantsVsZombies/model/solver.prototxt

代码的根目录下执行

$ sh examples/PlantsVsZombies/train_alexnet.sh

整个执行过程差不多需要28分钟左右才算是处理完成。

7.用植物碎片验证分类结果

$ ./build/examples/cpp_classification/classification.bin \ examples/PlantsVsZombies/model/deploy.prototxt \ examples/PlantsVsZombies/model/caffe_alexnet_train_iter_5.caffemodel \ examples/PlantsVsZombies/mean.binaryproto \ examples/PlantsVsZombies/labels.txt \ examples/PlantsVsZombies/images/detect/peashooter.png

输出结果如下:

---------- Prediction for examples/PlantsVsZombies/images/detect/peashooter.png ---------- 0.5704 - "0 Plant" 0.4296 - "1 Zombie"