Android Studio build error: java.lang.OutOfMemoryError: GC overhead limit exceeded

The full crash output is as follows:


```text
UNEXPECTED TOP-LEVEL ERROR:
java.lang.OutOfMemoryError: GC overhead limit exceeded
    at com.android.dx.cf.code.RopperMachine.getSources(RopperMachine.java:665)
    at com.android.dx.cf.code.RopperMachine.run(RopperMachine.java:288)
    at com.android.dx.cf.code.Simulator$SimVisitor.visitLocal(Simulator.java:612)
    at com.android.dx.cf.code.BytecodeArray.parseInstruction(BytecodeArray.java:412)
    at com.android.dx.cf.code.Simulator.simulate(Simulator.java:94)
    at com.android.dx.cf.code.Ropper.processBlock(Ropper.java:782)
    at com.android.dx.cf.code.Ropper.doit(Ropper.java:737)
    at com.android.dx.cf.code.Ropper.convert(Ropper.java:346)
    at com.android.dx.dex.cf.CfTranslator.processMethods(CfTranslator.java:282)
    at com.android.dx.dex.cf.CfTranslator.translate0(CfTranslator.java:139)
    at com.android.dx.dex.cf.CfTranslator.translate(CfTranslator.java:94)
    at com.android.dx.command.dexer.Main.processClass(Main.java:682)
    at com.android.dx.command.dexer.Main.processFileBytes(Main.java:634)
    at com.android.dx.command.dexer.Main.access$600(Main.java:78)
    at com.android.dx.command.dexer.Main$1.processFileBytes(Main.java:572)
    at com.android.dx.cf.direct.ClassPathOpener.processArchive(ClassPathOpener.java:284)
    at com.android.dx.cf.direct.ClassPathOpener.processOne(ClassPathOpener.java:166)
    at com.android.dx.cf.direct.ClassPathOpener.process(ClassPathOpener.java:144)
    at com.android.dx.command.dexer.Main.processOne(Main.java:596)
    at com.android.dx.command.dexer.Main.processAllFiles(Main.java:498)
    at com.android.dx.command.dexer.Main.runMonoDex(Main.java:264)
    at com.android.dx.command.dexer.Main.run(Main.java:230)
    at com.android.dx.command.dexer.Main.main(Main.java:199)
    at com.android.dx.command.Main.main(Main.java:103)
```

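Solution: the stack trace shows the error is thrown inside the dx dexer (`com.android.dx`), so give the dex process a larger maximum heap: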

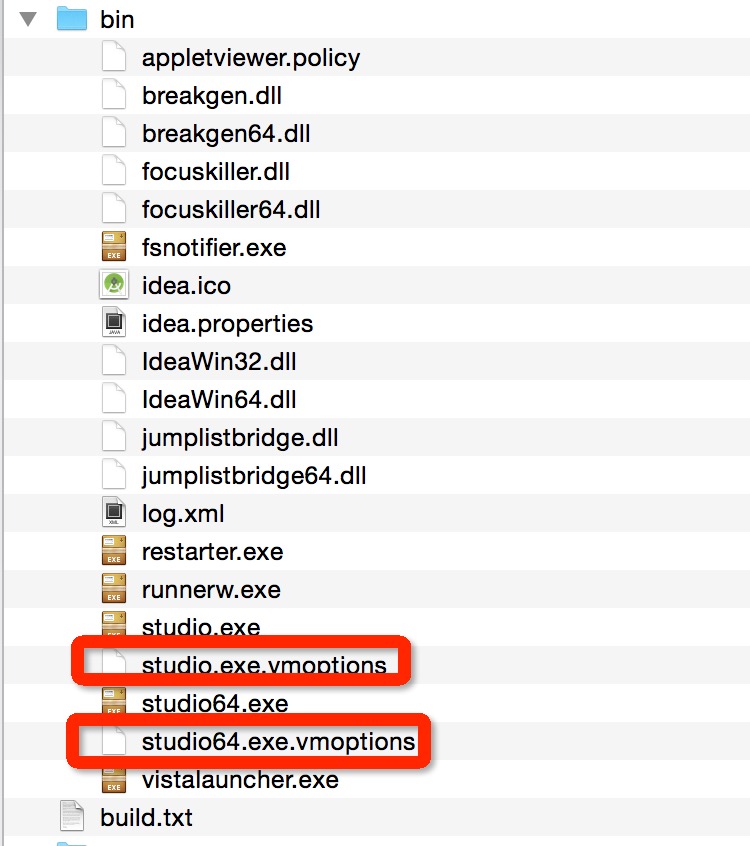

- If the setting should apply to the whole project, add the following configuration to build.gradle:

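  ```groovy
  android {
      // ...
      dexOptions {
          // enable dx incremental mode
          incremental true
          // maximum heap (-Xmx) for the dx process
          javaMaxHeapSize "4g"
      }
      // ...
  }
  ```

- If the setting should only take effect for unit tests, add the following configuration to build.gradle: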

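  ```groovy
  android {
      // ...
      testOptions {
          android.dexOptions {
              incremental true
              javaMaxHeapSize "4g"
          }
      }
      // ...
  }
  ```

  Note that inside `testOptions`, `android.dexOptions` resolves to the project's top-level `android` extension, so this block most likely configures the same dex options as the project-wide version rather than applying only to unit tests.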
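If the larger heap really is wanted only for test builds, one option is to make the setting conditional on the tasks Gradle was asked to run. The sketch below assumes an Android Gradle Plugin version that still supports the `dexOptions` block, and uses a hypothetical heuristic (any requested task name containing "test") to decide; `gradle.startParameter.taskNames` is the Gradle `StartParameter` list of task names passed on the command line or by the IDE.

```groovy
android {
    dexOptions {
        // Hypothetical heuristic: treat the build as a test build when any
        // requested task name contains "test" (e.g. testDebugUnitTest,
        // connectedAndroidTest). The plugin itself provides no such flag.
        def runningTests = gradle.startParameter.taskNames.any {
            it.toLowerCase().contains('test')
        }
        if (runningTests) {
            // Raise the dx heap (-Xmx) only for test builds
            javaMaxHeapSize "4g"
        }
    }
}
```

`taskNames` only holds the tasks that were explicitly requested, so test tasks pulled in indirectly (for example by `build`) are not seen by this check.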